The Matrix was right: humans will act as batteries

Brain power: harvesting power from the cerebrospinal fluid within the subarachnoid space. Inset at right: a micrograph of a prototype, showing the metal layers of the anode (central electrode) and cathode contact (outer ring) patterned on a silicon wafer. (Credit: Karolinska Institutet/Stanford University)

MIT engineers have developed a fuel cell that runs on glucose and could power highly efficient brain implants of the future, helping paralyzed patients move their arms and legs again with the power source built in.

The fuel cell strips electrons from glucose molecules to create a small electric current.

The researchers, led by Rahul Sarpeshkar, an associate professor of electrical engineering and computer science at MIT, fabricated the fuel cell on a silicon chip, allowing it to be integrated with other circuits that would be needed for a brain implant.

In the 1970s, scientists showed they could power a pacemaker with a glucose fuel cell, but the idea was abandoned in favor of lithium-ion batteries, which could provide significantly more power per unit area than glucose fuel cells.

Those early glucose fuel cells also relied on enzymes that proved impractical for long-term implantation in the body, since they eventually ceased to function efficiently.

How to generate hundreds of microwatts from sugar

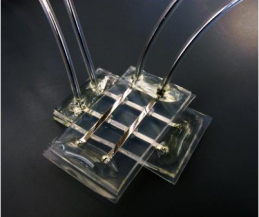

A silicon wafer with glucose fuel cells of varying sizes; the largest is 64 by 64 mm. (credit: Sarpeshkar Lab)

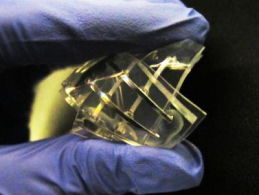

The new fuel cell is fabricated from silicon, using the same technology used to make semiconductor electronic chips, with no biological components.

A platinum catalyst strips electrons from glucose, mimicking the activity of cellular enzymes that break down glucose to generate ATP, the cell’s energy currency. (Platinum has a proven record of long-term biocompatibility within the body.)
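The electron-stripping step can be written as a pair of half-cell reactions. The sketch below assumes glucose is oxidized to gluconic acid, releasing two electrons per molecule, which is the reaction usually cited for platinum-catalyzed (non-enzymatic) glucose fuel cells; the article itself does not name the reaction products, so treat this as an illustration rather than the authors' stated chemistry.

```latex
\begin{align*}
\text{Anode:}   \quad & \mathrm{C_6H_{12}O_6 + H_2O \;\rightarrow\; C_6H_{12}O_7 + 2\,H^+ + 2\,e^-} \\
\text{Cathode:} \quad & \mathrm{\tfrac{1}{2}\,O_2 + 2\,H^+ + 2\,e^- \;\rightarrow\; H_2O} \\
\text{Overall:} \quad & \mathrm{C_6H_{12}O_6 + \tfrac{1}{2}\,O_2 \;\rightarrow\; C_6H_{12}O_7}
\end{align*}
```

The electrons released at the anode travel through the external circuit to the cathode, and that flow is the small current the implant would use.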

So far, the fuel cell can generate up to hundreds of microwatts — enough to power an ultra-low-power and clinically useful neural implant.
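To get a feel for what "hundreds of microwatts" means for an implant, here is a rough power-budget sketch in Python. The areal power density and the per-subsystem loads are hypothetical placeholders chosen for illustration; they are not figures from the article or the study. Only the 64 mm side length comes from the wafer caption above.

```python
# Back-of-the-envelope power budget for a square glucose fuel cell.
# All numeric values except the 64 mm side length are ASSUMPTIONS.

def cell_power_uw(side_mm: float, density_uw_per_cm2: float) -> float:
    """Power (microwatts) of a square cell from its side length and areal power density."""
    area_cm2 = (side_mm / 10.0) ** 2  # convert mm to cm, then square
    return area_cm2 * density_uw_per_cm2


def fits_budget(available_uw: float, loads_uw: dict) -> bool:
    """True if the summed subsystem loads do not exceed the available power."""
    return sum(loads_uw.values()) <= available_uw


# Hypothetical power density, for illustration only.
ASSUMED_DENSITY_UW_PER_CM2 = 5.0

# Hypothetical implant subsystems and their draws in microwatts.
HYPOTHETICAL_LOADS_UW = {"amplifiers": 60.0, "adc": 20.0, "radio": 80.0}

if __name__ == "__main__":
    power = cell_power_uw(64.0, ASSUMED_DENSITY_UW_PER_CM2)
    print(f"Estimated cell output: {power:.1f} microwatts")
    print("Budget fits:", fits_budget(power, HYPOTHETICAL_LOADS_UW))
```

With these assumed numbers the cell output lands in the hundreds-of-microwatts range and covers the example loads; different assumptions would change the result accordingly.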

Benjamin Rapoport, a former graduate student in the Sarpeshkar lab and the first author on the new MIT study, calculated that in theory, the glucose fuel cell could get all the sugar it needs from the cerebrospinal fluid (CSF) that bathes the brain and protects it from banging into the skull.

There are very few cells in the CSF, so it’s highly unlikely that an implant located there would provoke an immune response, the researchers say.

Structure of the glucose fuel cell and the oxygen and glucose concentration gradients crucially associated with its cathode and anode half-cell reactions (credit: Benjamin I. Rapoport, Jakub T. Kedzierski, Rahul Sarpeshkar/PLoS One)

The CSF also contains significant glucose that the body does not generally use. Because the fuel cell draws only a small fraction of the available power, its impact on the brain’s function would likely be small.

Implantable medical devices

“It will be a few more years into the future before you see people with spinal-cord injuries receive such implantable systems in the context of standard medical care, but those are the sorts of devices you could envision powering from a glucose-based fuel cell,” says Rapoport.

Karim Oweiss, an associate professor of electrical engineering, computer science and neuroscience at Michigan State University, says the work is a good step toward developing implantable medical devices that don’t require external power sources.

“It’s a proof of concept that they can generate enough power to meet the requirements,” says Oweiss, adding that the next step will be to demonstrate that it can work in a living animal.

A team of researchers at Brown University, Massachusetts General Hospital and other institutions recently demonstrated that paralyzed patients could use a brain-machine interface to move a robotic arm; those implants, however, have to be plugged into a wall outlet.

Ultra-low-power bioelectronics

Sarpeshkar’s group is a leader in the field of ultra-low-power electronics, having pioneered such designs for cochlear implants and brain implants. “The glucose fuel cell, when combined with such ultra-low-power electronics, can enable brain implants or other implants to be completely self-powered,” says Sarpeshkar, author of the book Ultra Low Power Bioelectronics.

The book discusses how the combination of ultra-low-power and energy-harvesting design can enable self-powered devices for medical, bio-inspired and portable applications.

Sarpeshkar’s group has worked on all aspects of implantable brain-machine interfaces and neural prosthetics, including recording from nerves, stimulating nerves, decoding nerve signals and communicating wirelessly with implants.

One such neural prosthetic is designed to record electrical activity from hundreds of neurons in the brain’s motor cortex, which is responsible for controlling movement. That data is amplified and converted into a digital signal so that computers — or in the Sarpeshkar team’s work, brain-implanted microchips — can analyze it and determine which patterns of brain activity produce movement.
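The amplify-then-digitize step described above can be sketched in a few lines of Python. This is a simplified illustration, not the Sarpeshkar group's circuit: the gain, the full-scale voltage, the 8-bit resolution, and the function names are all assumptions made for the example.

```python
# Toy model of a recording front end: amplify tiny neural voltages,
# then quantize them to integer codes as an idealized ADC would.
# Gain, full-scale range, and bit depth are ASSUMED values.

def amplify(samples_v, gain):
    """Scale each input sample (volts) by a fixed gain."""
    return [gain * v for v in samples_v]


def quantize(samples_v, full_scale_v, bits):
    """Map voltages in [-full_scale_v, +full_scale_v) to integer codes 0 .. 2**bits - 1."""
    levels = 2 ** bits
    step = 2.0 * full_scale_v / levels  # voltage width of one code
    codes = []
    for v in samples_v:
        # Clip out-of-range inputs so they saturate instead of overflowing.
        clipped = max(-full_scale_v, min(v, full_scale_v - step))
        codes.append(int((clipped + full_scale_v) // step))
    return codes


if __name__ == "__main__":
    raw = [0.0, 0.0004, -0.0004, 0.01]  # hypothetical electrode voltages, in volts
    digital = quantize(amplify(raw, gain=1000.0), full_scale_v=1.0, bits=8)
    print(digital)
```

A decoder, whether on an external computer or an implanted chip, would then look for patterns in these integer codes across many channels.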

The fabrication of the glucose fuel cell was done in collaboration with Jakub Kedzierski at MIT’s Lincoln Laboratory. “This collaboration with Lincoln Lab helped make a long-term goal of mine — to create glucose-powered bioelectronics — a reality,” Sarpeshkar says.

Although Sarpeshkar has begun working on bringing ultra-low-power and medical technology to market, he cautions that glucose-powered implantable medical devices are still many years away.

Ref.: Benjamin I. Rapoport, Jakub T. Kedzierski, Rahul Sarpeshkar, A Glucose Fuel Cell for Implantable Brain-Machine Interfaces, PLoS ONE, 2012, DOI: 10.1371/journal.pone.0038436 (open access)