January 16, 2013

Robots That Write Our News

I got to wondering how far robotics might have penetrated the media world, so I started looking around and found an article by Wired on Narrative Science and how it programs machines to write news stories.

As it turns out, they are already being used by reputable companies such as Forbes to write for their websites.

Narrative Science’s algorithms built the following article using pitch-by-pitch game data that parents entered into an iPhone app called GameChanger. Last year the software produced nearly 400,000 accounts of Little League games. This year that number is expected to top 1.5 million.

"Friona fell 10-8 to Boys Ranch in five innings on Monday at Friona despite racking up seven hits and eight runs. Friona was led by a flawless day at the dish by Hunter Sundre, who went 2-2 against Boys Ranch pitching. Sundre singled in the third inning and tripled in the fourth inning … Friona piled up the steals, swiping eight bags in all …"

You couldn't tell the difference, could you? It may lack a little character, but that could easily change. If you think that a computer could never really replace a human at writing stories, think again. Robots have already replaced us at many things. Sports writers might have some cause for concern.

Narrative Science's co-founder Kristian Hammond was recently asked at a conference by Wired reporter Steven Levy to predict what percentage of news would be written by computers in 15 years. At first he tried to duck the question, but with some prodding he sighed and gave in: “More than 90 percent.”

He also seems to be pretty optimistic that a computer could win a Pulitzer Prize within five years.

The software can be customized to behave in particular ways, such as purposely omitting data like errors at a softball game. Articles that require lots of data and follow a predictable formula or framework, like sports and finance, are best suited to Narrative Science's programs. They can also customize the tone of the stories to sound like a breathless financial reporter screaming from a trading floor or a dry sell-side researcher pedantically walking you through it.
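To make the idea concrete, here is a minimal sketch of template-based story generation in Python. It is not Narrative Science's software, whose internals are not described here; the `GameData` fields, the `TONES` presets and the `write_recap` function are all hypothetical. It shows the three behaviors just described: filling sentence templates from structured data, switching tone, and deliberately omitting a statistic such as errors.

```python
from dataclasses import dataclass


@dataclass
class GameData:
    """Box-score fields for one game, from the point of view of `team`."""
    team: str
    opponent: str
    team_runs: int
    opponent_runs: int
    innings: int
    hits: int
    errors: int
    steals: int


# Hypothetical tone presets: the same facts rendered with different verbs.
TONES = {
    "dry": {"win": "defeated", "loss": "lost to", "tie": "tied"},
    "breathless": {"win": "stormed past", "loss": "was stunned by",
                   "tie": "battled to a draw with"},
}


def write_recap(game: GameData, tone: str = "dry", omit_errors: bool = True) -> str:
    """Fill fixed sentence templates from structured game data."""
    if game.team_runs == game.opponent_runs:
        outcome = "tie"
    elif game.team_runs > game.opponent_runs:
        outcome = "win"
    else:
        outcome = "loss"
    verb = TONES[tone][outcome]
    high = max(game.team_runs, game.opponent_runs)
    low = min(game.team_runs, game.opponent_runs)

    # Lead sentence: result, score (winner's runs first) and length of game.
    lead = f"{game.team} {verb} {game.opponent} {high}-{low} in {game.innings} innings"
    if outcome == "loss" and game.hits > 0:
        lead += f" despite racking up {game.hits} hits"
    sentences = [lead + "."]

    # Optional sentences are triggered by simple rules on the data.
    if game.steals >= 5:
        sentences.append(
            f"{game.team} piled up the steals, swiping {game.steals} bags in all."
        )
    # Editorial choice: errors can be deliberately left out of the story.
    if not omit_errors and game.errors > 0:
        sentences.append(f"{game.team} committed {game.errors} errors.")
    return " ".join(sentences)


if __name__ == "__main__":
    # Scores, innings, hits and steals follow the Friona example above;
    # the error count (3) is made up for illustration.
    game = GameData("Friona", "Boys Ranch", 8, 10, 5, 7, 3, 8)
    print(write_recap(game))
    print(write_recap(game, tone="breathless", omit_errors=False))
```

A production system would add many more rules (which facts are newsworthy, how to vary phrasing from story to story), but structured data in and templated prose out is the basic pattern this sketch illustrates.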

Recently they have been trying to tackle areas outside sports and finance, such as:

1) writing reviews of the best restaurants.

2) writing a monthly report for franchise operators that analyzes sales figures, compares them to regional peers, and suggests particular menu items to push.

3) producing personalized 401(k) financial reports.

4) synopses of World of Warcraft sessions: players could get a recap after a big raid that would read as if an embedded journalist had accompanied their guild.

Hammond's bold vision is that within 20 years, as more data becomes available and the programs mature, computers will be writing in every area, from commodity news to explanatory journalism and, ultimately, detailed long-form articles.

Article by J5un for Emerging Tech Trends for Transhumanism

You can check out Narrative Science here

Post update: Since writing this article, I have come across an app for websites that provides real-time news updates. You can check it out at Nozzl.com.

August 14, 2011

“I Would Hope That Saner Minds Would Prevail” Deus Ex: Human Revolution Lead Writer Mary DeMarle on the Ethics of Transhumanism

So when Square Enix decided to pick up the reins from Eidos and create a new installment in the series, Deus Ex: Human Revolution (DX:HR), I was quite excited. The first indication that DX:HR was not going to be a crummy exploitation of the original’s success (see: Deus Ex 2: Invisible War) was the teaser trailer. Normally, a teaser trailer is just music and a slow build to a logo or single image that lets you know the game is coming out. Instead, the development team decided to demonstrate that it was taking the philosophy of the game seriously.

What philosophy, you might ask? Why, transhumanism, of course. Nick Bostrom, director of the Future of Humanity Institute at Oxford, centers the birth of transhumanism in the Renaissance and the Age of the Enlightenment in his article “A History of Transhumanist Thought” [pdf]. The visuals of the teaser harken to Renaissance imagery (such as the Da Vinci-style drawings) and the teaser ends with a Nietzschean quote: “Who we are is but a stepping stone to what we can become.” Later trailers would reference Icarus and Daedalus (who also happened to be the names of AI constructs in the original game), addressing the all-too-common fear that by pursuing technology, we are pursuing our own destruction. This narrative thread has become the central point of conflict in DX:HR. Even its viral ad campaign has been told through two lenses: that of Sarif Industries, maker of prosthetic bodies that change lives, and that of Purity First, a protest group that opposes human augmentation. The question is: upon which part of our shared humanity do we step as we climb to greater heights?

Read the rest of the original article here

August 3, 2011

Trends that’ll change the world in 10 years

Virtual species

Virtual humans, both physical (robots) and online (avatars), will be added to the workforce. By 2020, robots will be physically superior to humans. IBM’s Blue Brain project, for instance, is a 10-year mission to create a human brain using hardware and software.

“They believe that within a decade they’ll start to see consciousness emerge with this brain,” Evans says. By 2025, the robot population will surpass the number of humans in the developed world. By 2032, robots will be mentally superior to humans. And by 2035, robots could completely replace humans in the workforce. Beyond that is the creation of sophisticated avatars.

Evans points to IBM’s Watson as a template for the virtual human. Watson was able to answer a question by returning a single, accurate result. A patient may use a virtual machine instead of a WebMD search. Or hospitals can augment patient care with virtual machines. Augmented reality and gesture-based computing will enter our classrooms, medical facilities and communications, and transform them as well.

The Internet Of Things

We have passed the threshold where more things than people are connected to the Net. The transition to IPv6 supports virtually limitless connectivity. By 2020, there will be more than six Net-linked devices for every person on Earth. Currently, most of us are connected to the Net full-time through three or more devices, such as PCs, phones and TVs. Next up are sensor networks, using low-power sensors that “collect, transmit, analyze and distribute data on a massive scale,” says Evans.

An ‘Internet of things’ means that everything from electronic dust motes to “connected shoes” to household appliances can be connected to a network and assigned an IP address. Sensors are being embedded in shoes, asthma inhalers, and surgery devices. There’s even a tree in Sweden wired with sensors that tweets its mood and thoughts, with a bit of translation help from an interpretive engine developed by Ericsson (@connectedtree or #ectree).
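The claim that IPv6 supports limitless connectivity is easy to put in numbers: IPv4 addresses are 32 bits long, IPv6 addresses are 128 bits. The sketch below uses Python's standard `ipaddress` module to compare the two address spaces per person (assuming a world population of roughly 7 billion) and to hand out addresses to a few made-up devices from `2001:db8::/32`, the prefix reserved for documentation. The device names and the `assign_addresses` helper are illustrative only.

```python
import ipaddress

IPV4_SPACE = 2 ** 32   # IPv4 addresses are 32 bits long
IPV6_SPACE = 2 ** 128  # IPv6 addresses are 128 bits long
WORLD_POPULATION = 7_000_000_000  # rough 2011 figure (assumption)


def assign_addresses(prefix: str, devices: list[str]) -> dict[str, ipaddress.IPv6Address]:
    """Give each (hypothetical) device its own address inside one IPv6 subnet."""
    network = ipaddress.IPv6Network(prefix)
    if len(devices) >= network.num_addresses:
        raise ValueError("subnet too small for this many devices")
    # Skip offset 0 (the subnet's own address) and number devices from 1.
    return {name: network.network_address + i for i, name in enumerate(devices, start=1)}


if __name__ == "__main__":
    print(f"IPv4 addresses per person: {IPV4_SPACE / WORLD_POPULATION:.2f}")
    print(f"IPv6 addresses per person: {IPV6_SPACE / WORLD_POPULATION:.2e}")
    # A single /64 subnet alone holds 2**64 addresses.
    for name, addr in assign_addresses("2001:db8:0:1::/64", ["shoe", "inhaler", "fridge"]).items():
        print(name, addr)
```

IPv4 offers fewer than one address per person, while IPv6 offers on the order of 10^28, which is why every shoe and inhaler can plausibly get its own address.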

Quantum networking

Connectivity will continue to evolve, Evans predicts, and networks of tomorrow will be orders of magnitude faster than they are today. Network connectivity 10 years from now will be roughly 3 million times faster.

Multi-terabit networks using lasers are being explored. And early work is happening on a concept called “quantum networking,” based on quantum physics. This involves “quantum entanglement,” in which two particles become linked so that, however far apart they are separated, measuring one instantly determines the correlated outcome of measuring the other (although this correlation alone cannot be used to send information faster than light). Production, though, is not imminent.
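A toy simulation can show what that correlation looks like. The sketch below samples joint measurements of the Bell state (|00⟩ + |11⟩)/√2 in one fixed basis: each particle on its own looks like a fair coin flip, yet the two results always agree. It is only an illustration; sampling in a single basis like this could also be mimicked by a classical system, and demonstrating genuinely quantum correlations requires measuring in several bases (a Bell test). The function name `measure_pair` is hypothetical.

```python
import math
import random

# Amplitudes of the Bell state (|00> + |11>)/sqrt(2), in the basis order 00, 01, 10, 11.
BELL_PHI_PLUS = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]


def measure_pair(amplitudes: list[float], rng: random.Random) -> tuple[int, int]:
    """Sample one joint measurement; probabilities follow the Born rule |amplitude|^2."""
    probabilities = [abs(a) ** 2 for a in amplitudes]
    outcome = rng.choices(range(4), weights=probabilities)[0]
    # The high bit is particle A's result, the low bit is particle B's.
    return outcome >> 1, outcome & 1


if __name__ == "__main__":
    rng = random.Random(0)
    results = [measure_pair(BELL_PHI_PLUS, rng) for _ in range(1000)]
    agree = sum(a == b for a, b in results)
    ones_for_a = sum(a for a, _ in results)
    print(f"A and B agreed in {agree} of {len(results)} trials")
    print(f"A alone read 1 in {ones_for_a} trials (about half: A's results alone look random)")
```

Because A's results on their own are random, the person holding particle A cannot read any message from them, which is why entanglement does not allow faster-than-light signaling.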

Zettabyte Era

By 2015, one zettabyte of data will flow over the Internet. One zettabyte is equivalent to a stack of books stretching from Earth to Pluto 20 times. “This is the same as every person on Earth tweeting for 100 years, or 125 million years of your favourite one-hour TV show,” says Evans. Our love of high-definition video accounts for much of the increase. By Cisco’s count, 91% of Internet data in 2015 will be video.

And what’s more, he said, the data itself is becoming richer, with every surface (from tables to signs) becoming a digital display, and images evolving from megapixel, to gigapixel, to terapixel definition. So, the so-called “zettaflood” will require vastly improved networks to move more data, and not drop the ball (or the packets) of our beloved video.
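Evans’s TV comparison can be sanity-checked with a little arithmetic. Assuming “zettabyte” uses the decimal SI meaning (10^21 bytes) and an average 365.25-day year, spreading one zettabyte over 125 million years of one-hour episodes works out to roughly 0.9 GB per hour of video, or an average bitrate of about 2 Mbit/s. The sketch below only reproduces that division; the `implied_video_rate` helper is hypothetical and makes no claim about the data behind Cisco’s forecast.

```python
ZETTABYTE = 10 ** 21            # bytes, using the decimal SI prefix
HOURS_PER_YEAR = 365.25 * 24    # average year, including leap days
SHOW_YEARS = 125_000_000        # "125 million years" from the quote
VIDEO_SHARE = 0.91              # Cisco's 91% video estimate for 2015


def implied_video_rate(total_bytes: float, years: float) -> tuple[float, float]:
    """Return (bytes per hour, bits per second) if total_bytes is watched over `years`."""
    hours = years * HOURS_PER_YEAR
    bytes_per_hour = total_bytes / hours
    bits_per_second = bytes_per_hour * 8 / 3600
    return bytes_per_hour, bits_per_second


if __name__ == "__main__":
    per_hour, bitrate = implied_video_rate(ZETTABYTE, SHOW_YEARS)
    print(f"{per_hour / 1e9:.2f} GB per one-hour episode")
    print(f"{bitrate / 1e6:.2f} Mbit/s average bitrate")
    print(f"Video share of one zettabyte: {VIDEO_SHARE * ZETTABYTE:.2e} bytes")
```

About 2 Mbit/s is a plausible rate for compressed video of that era, so the comparison in the quote is internally consistent.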

Adaptive technology

Technology is finally adapting to us. Evans cites image recognition, puzzle resolution, augmented reality and gesture-based computing as key examples of such technologies.

A technology called 3D printing will allow us to instantly manufacture any physical item, from food to bicycles, using printer technology. Through 3D printing, people in the future will download things as easily as they download music.

“3D printing is the process of joining materials to make objects from 3D model data, usually layer upon layer,” says Evans, adding: “It is not far that we will be able to print human organs.” In March, Dr Anthony Atala from Wake Forest Institute for Regenerative Medicine printed a proof-of-concept kidney mold onstage at TED. It was not living tissue, but the point was well-made.
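The “layer upon layer” part of that definition is where software comes in: before printing, a 3D model is sliced into thin horizontal cross-sections. A minimal sketch, assuming the model is a solid sphere (so each slice is a circle) and using a hypothetical `slice_sphere` helper; real slicers work on triangle meshes and also plan tool paths, which this omits.

```python
import math


def slice_sphere(radius_mm: float, layer_height_mm: float) -> list[tuple[float, float]]:
    """Cut a solid sphere into layers; return (height, circle radius) per layer.

    Heights are measured from the sphere's center, and each layer is sampled at
    its mid-height, as a simple slicer might do.
    """
    if radius_mm <= 0 or layer_height_mm <= 0:
        raise ValueError("radius and layer height must be positive")
    layers = []
    n_layers = math.ceil(2 * radius_mm / layer_height_mm)
    for i in range(n_layers):
        z = -radius_mm + (i + 0.5) * layer_height_mm
        if abs(z) >= radius_mm:
            continue  # a partial top layer can fall outside the sphere
        layers.append((z, math.sqrt(radius_mm ** 2 - z ** 2)))
    return layers


if __name__ == "__main__":
    # A 20 mm ball printed in 1 mm layers: the printer would trace one circle per layer.
    for z, r in slice_sphere(10.0, 1.0):
        print(f"z = {z:+.1f} mm  ->  circle of radius {r:.2f} mm")
```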

A better you

“We think nothing of using pacemakers,” Evans points out. In the next 10 years, medical technologies will grow vastly more sophisticated as computing power becomes available in smaller forms. Devices like nanobots and the ability to grow replacement organs from our own tissues will be the norm. “The ultimate integration may be brain-machine interfaces that eventually allow people with spinal cord injuries to live normal lives,” he says.

Today we have mind-controlled video games and wheelchairs, software by Intel that can scan the brain and tell what you are thinking and tools that can actually predict what you are going to do before you do it.

Cloud computing

By 2020, one-third of all data will live in or pass through the cloud. IT spending on innovation and cloud computing could top $1 trillion by 2014.

Right now, voice search on an Android phone sends the query to Google’s cloud to decipher and return results. “We’ll see more intelligence built into communication. Things like contextual and location-based information.”

With an always-connected device, the network can use more granular presence information: tapping into a personal sensor to know that a person is asleep and route an incoming call to voicemail, or knowing that a person is traveling at 60 mph in a car and that this is not the time for a video call.
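Those presence examples amount to simple routing rules. A minimal sketch, assuming a hypothetical `Presence` record fed by personal sensors and an illustrative 15 mph cut-off for “in a moving vehicle”; neither comes from Evans’s talk.

```python
from dataclasses import dataclass

DRIVING_SPEED_MPH = 15.0  # illustrative threshold for "in a moving vehicle"


@dataclass
class Presence:
    """What the network knows about a person from their connected devices."""
    asleep: bool
    speed_mph: float


def route_call(presence: Presence, call_type: str) -> str:
    """Decide how to deliver an incoming 'voice' or 'video' call."""
    if call_type not in ("voice", "video"):
        raise ValueError(f"unknown call type: {call_type}")
    if presence.asleep:
        return "voicemail"
    if presence.speed_mph >= DRIVING_SPEED_MPH and call_type == "video":
        return "voice"  # downgrade: not the time for a video call
    return call_type


if __name__ == "__main__":
    print(route_call(Presence(asleep=True, speed_mph=0), "voice"))
    print(route_call(Presence(asleep=False, speed_mph=60), "video"))
    print(route_call(Presence(asleep=False, speed_mph=0), "video"))
```

Downgrading a video call to voice while driving is just one possible policy; sending it to voicemail would fit the description equally well.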

Power of Power

How are all networked devices going to be powered, and who or what is going to power them? The answer, says Evans, lies in small things. Solar arrays will become increasingly important.

Technologies to make this more economically pragmatic are on their way. Sandia produces solar cells that use 100 times less material with the same efficiency. MIT technology allows windows to generate power without blocking the view.

An inkjet printer produces solar cells with a 90 per cent decrease in waste at significantly lower cost. Anything that generates or needs energy, Evans says, will be connected to or managed by an intelligent network.

World Is Flat

The ability of people to connect with each other all around the world, within seconds, via social media isn’t just a social phenomenon, Evans says; it’s a flattening out of who has access to technology. He cited the example of Wael Ghonim, the Middle East-based Google executive whose Facebook page, “We are all Khaled Saeed,” was a spark in the Egyptian uprising and one of the key events of the Arab Spring.

A smaller world also means faster information dissemination. The capture, dissemination and consumption of events are going from “near time” to “real time.” This in turn will drive more rapid influence among cultures.

Self-designed evolution

March 2010: Retina implant restores vision to blind patients.

April 2010: Trial of artificial pancreas starts.

June 2011: Spinning heart (no pulse, no clogs and no breakdowns) developed.

Stephen Hawking says, “Humans are entering a stage of self-designed evolution.”

Taking the medical technology idea to the next level, healthy humans will be given the tools to augment themselves. While the early use of these technologies will be to repair unhealthy tissue or fix the consequences of brain injury, eventually designer enhancements will be available to all.

Ultimately, we will use so much technology to mend, improve or enhance our bodies that we will become cyborgs. Futurist Ray Kurzweil is pioneering this idea with a concept he calls the Singularity, the point at which man and machine merge and become a new species. (Kurzweil says this will happen by 2045.)

—Compiled by Beena Kuruvilla

July 28, 2011

The Walk Again Project

Brain-machine interface (BMI) research raises the hope that, in the not-too-distant future, patients suffering from a variety of neurological disorders that lead to devastating levels of paralysis may be able to recover their mobility by harnessing their own brain impulses to directly control sophisticated neuroprostheses.

The Walk Again Project, an international consortium of leading research centers around the world, represents a new paradigm for scientific collaboration among the world’s academic institutions, bringing together a global network of scientific and technological experts, distributed among all the continents, to achieve a key humanitarian goal.

The project’s central goal is to develop and implement the first BMI capable of restoring full mobility to patients suffering from a severe degree of paralysis. This lofty goal will be achieved by building a neuroprosthetic device that uses a BMI as its core, allowing the patients to capture and use their own voluntary brain activity to control the movements of a full-body prosthetic device. This “wearable robot,” also known as an “exoskeleton,” will be designed to sustain and carry the patient’s body according to his or her mental will.
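How can voluntary brain activity be turned into movement commands? One classic approach from motor neuroscience, shown here purely as an illustration and not as the Walk Again Project’s method, is the population vector: each neuron is assumed to fire fastest for a “preferred” movement direction, and the decoder sums every neuron’s preferred direction weighted by how far its firing rate rises above baseline. The cosine tuning model, the 8 simulated neurons and the rate values are assumptions for this sketch.

```python
import math


def cosine_tuned_rates(direction: float, preferred: list[float],
                       baseline: float, depth: float) -> list[float]:
    """Simulated firing rates (Hz) of neurons with cosine tuning to movement direction."""
    return [baseline + depth * math.cos(direction - p) for p in preferred]


def population_vector(rates: list[float], preferred: list[float], baseline: float) -> float:
    """Decode a movement direction (radians) from firing rates."""
    x = sum((r - baseline) * math.cos(p) for r, p in zip(rates, preferred))
    y = sum((r - baseline) * math.sin(p) for r, p in zip(rates, preferred))
    return math.atan2(y, x)


if __name__ == "__main__":
    n = 8
    preferred = [2 * math.pi * i / n for i in range(n)]  # evenly spaced preferences
    intended = math.radians(30)  # the direction the patient "wants" to move
    rates = cosine_tuned_rates(intended, preferred, baseline=10.0, depth=8.0)
    decoded = population_vector(rates, preferred, baseline=10.0)
    print(f"intended {math.degrees(intended):.1f} deg, decoded {math.degrees(decoded):.1f} deg")
```

With noise-free rates and evenly spaced preferred directions, the decoded angle matches the intended one. Real recordings are noisy, so a practical decoder would need many more neurons and calibration for each patient.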

In addition to developing new technologies that aim to improve the quality of life of millions of people worldwide, the Walk Again Project innovates by creating a completely new paradigm for global scientific collaboration among leading academic institutions. Under this model, leading scientific and technological experts from every continent come together in a major, non-profit effort to make a fellow human being walk again, based on their collective expertise. These world-renowned scholars will contribute key intellectual assets and provide a base for continued fundraising for the project, setting clear goals to establish fundamental advances toward restoring full mobility for patients in need.

Walk again Project Homepage

July 18, 2011

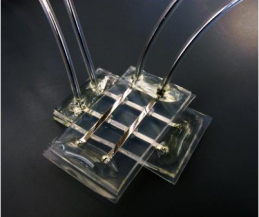

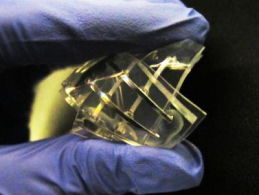

Soft memory device opens door to new biocompatible electronics

A memory device with the physical properties of Jell-O that functions well in wet environments (credit: Michael Dickey, North Carolina State University)

North Carolina State University researchers have developed a soft memory device design that functions well in wet environments and has memristor-like characteristics, opening the door to new types of smart biocompatible electronic devices.

A memristor (“memory resistor”) is an electronic device that changes its resistive state depending on the current or voltage history through the device.

The ability to function in wet environments and the biocompatibility of the gels mean that this technology holds promise for interfacing electronics with biological systems, such as cells, enzymes or tissue, and for medical monitoring.

The device is made using a liquid alloy of gallium and indium metals set into water-based gels. When the alloy electrode is exposed to a positive charge, it creates an oxidized skin that makes it resistive to electricity (a “0” state).

When the electrode is exposed to a negative charge, the oxidized skin disappears, and it becomes conductive to electricity (a “1” state).
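The write behavior described above can be captured in a toy model. This is a hypothetical sketch, not the NC State device physics: it treats the electrode as a two-state switch, uses made-up resistance values, and assumes a switching threshold so that a small “read” voltage does not disturb the stored bit. What it does reflect is the memristor-like property from the definition above: the state depends on the history of applied voltage, not just the present one.

```python
class LiquidMetalCell:
    """Toy two-state model of the gallium-indium gel memory cell (hypothetical values)."""

    HIGH_RESISTANCE_OHMS = 1e6  # oxidized skin present, reads as 0 (made-up value)
    LOW_RESISTANCE_OHMS = 1e2   # skin removed, reads as 1 (made-up value)

    def __init__(self, threshold_volts: float = 0.5):
        self.threshold_volts = threshold_volts  # assumed switching threshold
        self.oxidized = False  # assume the cell starts in the conductive state

    def apply_voltage(self, volts: float) -> None:
        """Positive bias grows the oxide skin; negative bias removes it."""
        if volts >= self.threshold_volts:
            self.oxidized = True
        elif volts <= -self.threshold_volts:
            self.oxidized = False
        # Smaller voltages leave the state unchanged, so the cell "remembers".

    @property
    def bit(self) -> int:
        return 0 if self.oxidized else 1

    @property
    def resistance_ohms(self) -> float:
        return self.HIGH_RESISTANCE_OHMS if self.oxidized else self.LOW_RESISTANCE_OHMS


if __name__ == "__main__":
    cell = LiquidMetalCell()
    for v in (1.0, 0.1, -1.0, 0.1):
        cell.apply_voltage(v)
        print(f"applied {v:+.1f} V -> bit {cell.bit}, {cell.resistance_ohms:.0e} ohms")
```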

Ref.: Orlin D. Velev, et al., Towards All-Soft Matter Circuits: Prototypes of Quasi-Liquid Devices with Memristor Characteristics, Advanced Materials, 2011; [DOI: 10.1002/adma.201101257]

Original Article by the Editor of Kurzweilai.net

October 3, 2009

It's tempting to call them lords of the flies. For the first time, researchers have controlled the movements of free-flying insects from afar, as if t

Green beetles

The Berkeley team implanted electrodes into the brain and muscles of two species: green June beetles called Cotinus texana from the southern US, and the much larger African species Mecynorrhina torquata. Both responded to stimulation in much the same way, but the weight of the electronics and their battery meant that only Mecynorrhina – which can grow to the size of a human palm – was strong enough to fly freely under radio control.

A particular series of electrical pulses to the brain causes the beetle to take off. No further stimulation is needed to maintain the flight. Though the average length of flights during trials was just 45 seconds, one lasted for more than 30 minutes. A single pulse causes a beetle to land again.

The insects' flight can also be directed. Pulses sent to the brain trigger a descent, on average by 60 centimetres. The beetles can be steered by stimulating the wing muscle on the opposite side from the direction they are required to turn, though this works only three-quarters of the time. After each manoeuvre, the beetles quickly right themselves and continue flying parallel to the ground.
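The control scheme in the last two paragraphs can be summarized as a small command table. The sketch below is hypothetical: the `BeetleController` class and the pulse-pattern labels are invented for illustration and are not the Berkeley team’s software. It encodes what the text reports: a pulse series to the brain starts flight, nothing is needed to keep it going, pulses to the brain trigger a descent, a single pulse lands the insect, and turns are made by stimulating the wing muscle on the opposite side, which works about three-quarters of the time.

```python
import random

TURN_SUCCESS_RATE = 0.75  # "works only three-quarters of the time"


class BeetleController:
    """Hypothetical command layer mapping flight commands to stimulation sites."""

    def __init__(self, rng: random.Random):
        self.rng = rng
        self.flying = False
        self.log: list[tuple[str, str]] = []  # (stimulation site, pulse pattern)

    def _stimulate(self, site: str, pattern: str) -> None:
        self.log.append((site, pattern))

    def _require_flight(self) -> None:
        if not self.flying:
            raise RuntimeError("beetle is not in flight")

    def take_off(self) -> None:
        self._stimulate("brain", "takeoff pulse series")
        self.flying = True  # no further stimulation needed to keep flying

    def descend(self) -> None:
        self._require_flight()
        self._stimulate("brain", "descent pulses")

    def land(self) -> None:
        self._require_flight()
        self._stimulate("brain", "single pulse")
        self.flying = False

    def turn(self, direction: str) -> bool:
        """Stimulate the opposite wing muscle; return whether the turn took effect."""
        self._require_flight()
        opposite = {"left": "right", "right": "left"}[direction]
        self._stimulate(f"{opposite} wing muscle", "steering pulses")
        return self.rng.random() < TURN_SUCCESS_RATE


if __name__ == "__main__":
    ctl = BeetleController(random.Random(1))
    ctl.take_off()
    print("left turn worked:", ctl.turn("left"))
    ctl.land()
    for site, pattern in ctl.log:
        print(f"{site}: {pattern}")
```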

Brain insights

Tyson Hedrick, a biomechanist at the University of North Carolina, Chapel Hill, who was not involved in the research, says he is surprised at the level of control achieved, because the controlling impulses were delivered to comparatively large regions of the insect brain.

Precisely stimulating individual neurons or circuits may harness the beetles more precisely, he told New Scientist, but don't expect aerial acrobatics. "It's not entirely clear how much control a beetle has over its own flight," Hedrick says. "If you've ever seen a beetle flying in the wild, they're not the most graceful insects."

The research may be more successful in revealing just how the brain, nerves and muscles of insects coordinate flight and other behaviours than at bringing six-legged cyborg spies into service, Hedrick adds. "It may end up helping biologists more than it will help DARPA."

Brain-recording backpacks

It's a view echoed by Reid Harrison, an electrical engineer at the University of Utah, Salt Lake City, who has designed brain-recording backpacks for insects. "I'm sceptical about their ability to do surveillance for the following reason: no one has solved the power issue."

Batteries, solar cells and piezoelectrics that harvest energy from movement cannot provide enough power to run electrodes and radio transmitters for very long, Harrison says. "Maybe we'll have some advances in those technologies in the near future, but based on what you can get off the shelf now it's not even close."

Journal reference: Frontiers in Integrative Neuroscience, DOI: 10.3389/neuro.07.024.2009

September 28, 2009

The Reality of Robot Surrogates

How far are we from sending robots into the world in our stead?

Imagine a world where you're stronger, younger, better looking, and don't age. Well, you do, but your robot surrogate (which you control with your mind from a recliner at home while it does your bidding in the world) doesn't. It's a bit like The Matrix, but instead of a computer-generated avatar in a graphics-based illusion, in Surrogates (which opens Friday and stars Bruce Willis) you have a real titanium-and-fluid copy impersonating your flesh and blood and running around under your mental control. Other recent films have used similar concepts to ponder issues like outsourced virtual labor (Sleep Dealer) and incarceration (Gamer).

The real technology behind such fantastical fiction is grounded both in far-out research and practical robotics. So how far away is a world of mind-controlled personal automatons?

"We're getting there, but it will be quite a while before we have anything that looks like Bruce Willis," says Trevor Blackwell, the founder and CEO of Anybots, a robotics company in Mountain View, Calif., that builds "telepresence" robots controlled remotely like the ones in Surrogates.

Telepresence is action at a distance, or the projection of presence where you physically aren't. Technically, phoning in to your weekly staff meeting is a form of telepresence. So is joysticking a robot up to a suspected IED in Iraq so a soldier can investigate the scene while sitting in the (relative) safety of an armored vehicle.

Researchers are testing brain-machine interfaces on rats and monkeys that would let the animals directly control a robot, but so far the telepresence interfaces at work in the real world are physical. Through wireless Internet connections, video cameras, joysticks, and sometimes audio, humans move robots around at the office, in the operating room, underwater, on the battlefield, and on Mars.

A recent study by NextGen Research, a market research firm, projects that in the next five years, telepresence will become a significant feature of the US $1.16 billion personal robotics market, meaning robots for you or your home.

According to the study's project manager, Larry Fisher, telepresence "makes the most sense" for security and surveillance robots that would be used to check up on pets or family members from far away. Such robots could also allow health-care professionals to monitor elderly people taking medication at home to ensure the dosage and routine are correct.

Right now, most commercial teleoperated robots are just mobile webcams with speakers, according to NextGen. They can be programmed to roam a set path, or they can be controlled over the Internet by an operator. iRobot, the maker of the Roomba floor cleaner, canceled its telepresence robot, ConnectR, in January, choosing to wait until such a robot would be easier to use. But plenty of companies, such as Meccano/Erector and WowWee, are marketing personal telepresence bots.

Blackwell's Anybots, for example, has developed an office stand-in called QA. It's a Wi-Fi enabled, vaguely body-shaped wheeled robot with an ET-looking head that has cameras for eyes and a display in its chest that shows an image of the person it's standing in for. You can slap on virtual-reality goggles, sensor gloves, and a backpack of electronics to link to it over the Internet for an immersive telepresence experience. Or you can just connect to the robot through your laptop's browser.

For the rest of the article, go to IEEE Spectrum.

Original article posted by Anne-Marie Corley // September 2009

September 26, 2009

Honda's U3-X Personal Mobility Device is the Segway of unicycles

September 24, 2009

Stimulating Sight: Retinal Implant Could Help Restore Useful Level Of Vision To Certain Groups Of Blind People

The eye implant is designed for people who have lost their vision from retinitis pigmentosa or age-related macular degeneration, two of the leading causes of blindness. The retinal prosthesis would take over the function of lost retinal cells by electrically stimulating the nerve cells that normally carry visual input from the retina to the brain.

Such a chip would not restore normal vision but it could help blind people more easily navigate a room or walk down a sidewalk.

"Anything that could help them see a little better and let them identify objects and move around a room would be an enormous help," says Shawn Kelly, a researcher in MIT's Research Laboratory for Electronics and member of the Boston Retinal Implant Project.

The research team, which includes scientists, engineers and ophthalmologists from Massachusetts Eye and Ear Infirmary, the Boston VA Medical Center and Cornell as well as MIT, has been working on the retinal implant for 20 years. The research is funded by the VA Center for Innovative Visual Rehabilitation, the National Institutes of Health, the National Science Foundation, the Catalyst Foundation and the MOSIS microchip fabrication service.

Led by John Wyatt, MIT professor of electrical engineering, the team recently reported a new prototype that they hope to start testing in blind patients within the next three years.

Electrical stimulation

Patients who received the implant would wear a pair of glasses with a camera that sends images to a microchip attached to the eyeball. The glasses also contain a coil that wirelessly transmits power to receiving coils surrounding the eyeball.

When the microchip receives visual information, it activates electrodes that stimulate nerve cells in the areas of the retina corresponding to the features of the visual scene. The electrodes directly activate the optic nerve fibers that carry signals to the brain, bypassing the damaged layers of the retina.
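In rough terms, the chip has to turn a detailed camera image into a much coarser pattern of active electrodes. The sketch below is an illustrative assumption, not the Boston Retinal Implant Project’s algorithm (which the team hopes to refine with patient feedback): it averages the brightness of the image block covered by each electrode and switches that electrode on if the average reaches a threshold. The grid size, threshold and `image_to_electrodes` name are hypothetical.

```python
def image_to_electrodes(image: list[list[float]], rows: int, cols: int,
                        threshold: float) -> list[list[bool]]:
    """Map a grayscale image (values 0.0-1.0) onto a rows x cols electrode grid.

    Each electrode covers one equal-sized block of pixels and is switched on
    when the block's mean brightness reaches the threshold.
    """
    height, width = len(image), len(image[0])
    if height % rows or width % cols:
        raise ValueError("image size must divide evenly into the electrode grid")
    block_h, block_w = height // rows, width // cols
    grid = []
    for r in range(rows):
        grid_row = []
        for c in range(cols):
            block = [image[y][x]
                     for y in range(r * block_h, (r + 1) * block_h)
                     for x in range(c * block_w, (c + 1) * block_w)]
            grid_row.append(sum(block) / len(block) >= threshold)
        grid.append(grid_row)
    return grid


if __name__ == "__main__":
    # A 4x4 "camera frame" with a bright patch in the top-left corner.
    frame = [
        [1.0, 0.9, 0.0, 0.1],
        [0.8, 1.0, 0.0, 0.0],
        [0.0, 0.1, 0.0, 0.0],
        [0.0, 0.0, 0.2, 0.0],
    ]
    for row in image_to_electrodes(frame, rows=2, cols=2, threshold=0.5):
        print(" ".join("*" if on else "." for on in row))
```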

One question that remains is what kind of vision this direct electrical stimulation actually produces. About 10 years ago, the research team started to answer that by attaching electrodes to the retinas of six blind patients for several hours.

When the electrodes were activated, patients reported seeing a small number of "clouds" or "drops of blood" in their field of vision, and the number of clouds or blood drops they reported corresponded to the number of electrodes that were stimulated. When there was no stimulus, patients accurately reported seeing nothing. Those tests confirmed that retinal stimulation can produce some kind of organized vision in blind patients, though further testing is needed to determine how useful that vision can be.

After those initial tests, with grants from the Boston Veterans Administration Medical Center and the National Institutes of Health, the researchers started to build an implantable chip, which would allow them to do more long-term tests. Their goal is to produce a chip that can be implanted for at least 10 years.

One of the biggest challenges the researchers face is designing a surgical procedure and implant that won't damage the eye. In their initial prototypes, the electrodes were attached directly atop the retina from inside the eye, which carries more risk of damaging the delicate retina. In the latest version, described in the October issue of IEEE Transactions on Biomedical Engineering, the implant is attached to the outside of the eye, and the electrodes are implanted behind the retina.

That subretinal location, which reduces the risk of tearing the retina and requires a less invasive surgical procedure, is one of the key differences between the MIT implant and retinal prostheses being developed by other research groups.

Another feature of the new MIT prototype is that the chip is now contained in a hermetically sealed titanium case. Previous versions were encased in silicone, which would eventually allow water to seep in and damage the circuitry.

While they have not yet begun any long-term tests on humans, the researchers have tested the device in Yucatan miniature pigs, which have roughly the same size eyeballs as humans. Those tests are only meant to determine whether the implants remain functional and safe and are not designed to observe whether the pigs respond to stimuli to their optic nerves.

So far, the prototypes have been successfully implanted in pigs for up to 10 months, but further safety refinements need to be made before clinical trials in humans can begin.

Wyatt and Kelly say they hope that once human trials begin and blind patients can offer feedback on what they're seeing, they will learn much more about how to configure the algorithm implemented by the chip to produce useful vision. Patients have told them that what they would like most is the ability to recognize faces. "If they can recognize faces of people in a room, that brings them into the social environment as opposed to sitting there waiting for someone to talk to them," says Kelly.

Journal reference:

- Shire, D. B.; Kelly, S. K.; Chen, J.; Doyle, P.; Gingerich, M. D.; Cogan, S. F.; Drohan, W. A.; Mendoza, O.; Theogarajan, L.; Wyatt, J. L.; Rizzo, J. F. Development and Implantation of a Minimally Invasive Wireless Subretinal Neurostimulator. IEEE Transactions on Biomedical Engineering, October 2009. DOI: 10.1109/TBME.2009.2021401

September 23, 2009

Video surveillance system that reasons like a human brain

BRS Labs announced a video-surveillance technology called Behavioral Analytics, which leverages cognitive reasoning, and processes visual data on a level similar to the human brain.

It is impossible for humans to monitor the tens of millions of cameras deployed throughout the world, a fact long recognized by the international security community. Security video is either used only for forensic analysis after an incident has occurred, or it is watched by a limited-capability technology known as Video Analytics: software based on video motion and object classification that attempts to watch video streams and then sends an alarm on specific pre-programmed events. The problem is that this legacy solution generates so many false alarms that it is effectively useless in the real world.

BRS Labs has created a technology it calls Behavioral Analytics. It uses cognitive reasoning, much like the human brain, to process visual data and to identify criminal and terroristic activities. Built on a framework of cognitive learning engines and computer vision, the company’s AISight system provides an automated and scalable surveillance solution that analyzes behavioral patterns, activities and scene content without the need for human training, setup, or programming.

The system learns autonomously, and builds cognitive “memories” while continuously monitoring a scene through the “eyes” of a CCTV security camera. It sees and then registers the context of what constitutes normal behavior, and the software distinguishes and alerts on abnormal behavior without requiring any special programming, definition of rules or virtual trip lines.
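The post doesn't explain how AISight decides what counts as "normal," and BRS Labs' actual method isn't described here. As a rough illustration of the general idea of learning normal behavior without hand-written rules, here is a minimal Python sketch of unsupervised anomaly detection: it keeps a running mean and standard deviation of one measurement per camera zone (using Welford's online algorithm) and flags readings that fall more than a few standard deviations from what it has seen so far. The zone names, the `threshold` and `warmup` values, and the "people per minute" measurement are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Welford's online algorithm for a running mean and variance."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    def std(self) -> float:
        # Sample standard deviation; zero until at least two observations.
        return math.sqrt(self.m2 / (self.count - 1)) if self.count > 1 else 0.0


class NormalcyModel:
    """Hypothetical per-zone model: learns what 'normal' looks like for each
    camera zone and flags observations far outside it. Not BRS Labs' method."""

    def __init__(self, threshold: float = 3.0, warmup: int = 30) -> None:
        self.threshold = threshold  # z-score above which we alert (assumed value)
        self.warmup = warmup        # observations to learn before alerting (assumed value)
        self.zones: dict[str, RunningStats] = {}

    def observe(self, zone: str, value: float) -> bool:
        """Return True if `value` is anomalous for `zone`, then learn from it."""
        stats = self.zones.setdefault(zone, RunningStats())
        anomalous = False
        if stats.count >= self.warmup:
            sd = stats.std()
            if sd > 0 and abs(value - stats.mean) / sd > self.threshold:
                anomalous = True
        stats.update(value)  # every reading, flagged or not, updates the model
        return anomalous


if __name__ == "__main__":
    model = NormalcyModel()
    # Hypothetical "people per minute" at a gate, cycling between 10 and 14.
    for i in range(100):
        model.observe("gate", 10.0 + (i % 5))
    print(model.observe("gate", 12.0))  # typical reading
    print(model.observe("gate", 60.0))  # sudden crowd
```

Updating the statistics even on flagged readings keeps the sketch simple, but it means a lasting change slowly becomes the new normal; a real system would have to decide whether that is desirable.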

AISight is currently fielded across a wide variety of global critical infrastructure assets, protecting major international hotels, banking institutions, seaports, nuclear facilities, airports and dense urban areas plagued by criminal activity.

Original article by Help Net Security

September 16, 2009

Cyborg crickets could chirp at the smell of survivors

September 4, 2009

Go to hospital to see computing's future

Enlightened climb

Guided by eyes

Mind control

Seal of approval

August 24, 2009

Craig Venter: Programming algae to pump out oil

Profile

Craig Venter made his name sequencing the human genome. He is founder and CEO of Synthetic Genomics, which has begun a $600 million project with Exxon to transform the oil industry. This article was originally written by Catherine Brahic on July 25, 2009, for New Scientist.


August 10, 2009

Get smarter

Originally published by The Atlantic

Seventy-four thousand years ago, humanity nearly went extinct. A super-volcano at what’s now Lake Toba, in Sumatra, erupted with a strength more than a thousand times that of Mount St. Helens in 1980. Some 800 cubic kilometers of ash filled the skies of the Northern Hemisphere, lowering global temperatures and pushing a climate already on the verge of an ice age over the edge. Some scientists speculate that as the Earth went into a deep freeze, the population of Homo sapiens may have dropped to as low as a few thousand families.

The Mount Toba incident, although unprecedented in magnitude, was part of a broad pattern. For a period of 2 million years, ending with the last ice age around 10,000 B.C., the Earth experienced a series of convulsive glacial events. This rapid-fire climate change meant that humans couldn’t rely on consistent patterns to know which animals to hunt, which plants to gather, or even which predators might be waiting around the corner.

How did we cope? By getting smarter. The neurophysiologist William Calvin argues persuasively that modern human cognition—including sophisticated language and the capacity to plan ahead—evolved in response to the demands of this long age of turbulence. According to Calvin, the reason we survived is that our brains changed to meet the challenge: we transformed the ability to target a moving animal with a thrown rock into a capability for foresight and long-term planning. In the process, we may have developed syntax and formal structure from our simple language.

Read the rest of the article here.

May 30, 2009

Look out, Rover. Robots are man's new best friend

Industrial robots make up a roughly $18 billion annual market, according to the International Federation of Robotics. There are going to be a lot more of them as they move into homes, hospitals, classrooms, and barracks. NextGen Research has estimated that the worldwide market for consumer-oriented service robots will hit $15 billion by 2015... Read the original article here.

May 26, 2009

Despite 'Terminator,' machines still on our side: Scientists say AI will be humanity's 'Salvation'

Daily News, May 26, 2009: AI experts including Reid Simmons of the Robotics Institute at Carnegie Mellon University and Ray Kurzweil say the post-apocalyptic "Terminator Salvation" scenario is unlikely. "Kurzweil believes the technical advancement of the next few decades will herald a literal rewiring of the human brain. Given the shrinking costs of nanotechnology,..." Read the original article here.

May 25, 2009

Will Google be able to predict the future?

Recently, the "Big G's" research team has hinted that real-time search results could predict the future as well as describe the present.

Two researchers at Google combined Google Trends data on the most popular search terms with economists' models used to predict trends in areas like travel and home sales. This turned out to be a great success, yielding better forecasts in almost every case.
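The post doesn't say which models the researchers used. As a minimal sketch under that caveat, assume the simplest case: a one-lag autoregressive model, sales_t = b0 + b1*sales_{t-1}, compared against the same model with a search-volume index added as an extra regressor. The code fits both by ordinary least squares (standard library only) and scores one-step-ahead forecasts on held-out points. The series are made-up, noise-free toy data, not Google Trends output, and all function names are hypothetical.

```python
import math


def solve(a, b):
    """Solve a small linear system a*x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def ols(rows, y):
    """Ordinary least squares via the normal equations (X^T X) beta = X^T y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


def design(sales, trends, use_trends):
    """Build rows for sales_t = b0 + b1*sales_{t-1} (+ b2*trends_t if use_trends)."""
    rows = []
    for t in range(1, len(sales)):
        row = [1.0, sales[t - 1]]
        if use_trends:
            row.append(trends[t])
        rows.append(row)
    return rows, sales[1:]


def mean_abs_error(sales, trends, use_trends, train):
    """Fit on the first `train` rows, then score one-step-ahead forecasts on the rest."""
    rows, y = design(sales, trends, use_trends)
    beta = ols(rows[:train], y[:train])
    errors = [abs(sum(b * v for b, v in zip(beta, row)) - actual)
              for row, actual in zip(rows[train:], y[train:])]
    return sum(errors) / len(errors)


def toy_series(n):
    """Made-up, noise-free data: sales_t = 0.5*sales_{t-1} + 2*trends_t."""
    trends = [10 + 5 * math.sin(t) for t in range(n)]
    sales = [40.0]
    for t in range(1, n):
        sales.append(0.5 * sales[-1] + 2 * trends[t])
    return trends, sales


if __name__ == "__main__":
    trends, sales = toy_series(60)
    print("AR(1) only:    ", mean_abs_error(sales, trends, False, train=40))
    print("AR(1) + trends:", mean_abs_error(sales, trends, True, train=40))
```

Because the toy sales series is generated from the trends index, the augmented model fits it almost exactly while the lag-only model does not. Real search data is noisy, so any gain would be much smaller and would have to be checked on held-out data, as the train/test split here does.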

Google recently showed that search data can predict flu outbreaks, and that could be just the beginning. Adding real-time data could greatly increase the predictive power.

In a related story, researchers at Los Alamos National Laboratory in 2006 applied a mood-rating system to the text of FutureMe emails to gauge people's fears and hopes for the future. In case you haven't heard of FutureMe, it lets you send yourself an email scheduled to arrive at a future date, up to 30 years ahead.

What they found was that the emails for the years 2007 through 2012 were more depressed. Is it possible they could have predicted the recession we now face?
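The post doesn't describe how the mood ratings were computed. One common approach is lexicon-based scoring: each word carries a valence rating (published word lists such as ANEW use a 1 to 9 scale), a text's mood is the average rating of the rated words it contains, and those scores are then averaged by delivery year. The minimal Python sketch below assumes that approach; the tiny `LEXICON` and its ratings are invented for illustration, and the function names are hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical word -> valence ratings on a 1 (very unhappy) to 9 (very happy) scale.
# These values are made up; a real study would use a validated lexicon.
LEXICON = {
    "happy": 8.2, "hope": 7.1, "love": 8.7, "proud": 7.5,
    "afraid": 2.3, "worried": 2.9, "lost": 2.8, "sad": 1.6,
}


def mood_score(text):
    """Average valence of the rated words in `text`, or None if none appear."""
    words = re.findall(r"[a-z']+", text.lower())
    ratings = [LEXICON[w] for w in words if w in LEXICON]
    return sum(ratings) / len(ratings) if ratings else None


def mood_by_year(emails):
    """Average per-email mood for each delivery year; `emails` is (year, text) pairs."""
    buckets = defaultdict(list)
    for year, text in emails:
        score = mood_score(text)
        if score is not None:  # skip emails with no rated words
            buckets[year].append(score)
    return {year: sum(s) / len(s) for year, s in sorted(buckets.items())}


if __name__ == "__main__":
    sample = [  # made-up emails, not FutureMe data
        (2008, "So proud and happy"),
        (2012, "I am worried and sad"),
        (2012, "Feeling lost"),
    ]
    print(mood_by_year(sample))
```

Averaging per email first, then per year, keeps one long email from dominating a year's score; averaging all words in a year together would be an equally plausible choice.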

One of the researchers, Johan Bollen, will also be doing further research on emotional trends on Twitter.

May 7, 2009

TRANSCENDENT MAN

The film was designed to showcase the ideas in a sympathetic but not uncritical light:


Research gives clues for self-cleaning materials, water-striding robots

Self-cleaning walls, countertops, fabrics, even micro-robots that can walk on water could be closer to reality because of research on super-hydrophobic materials by scientists at the University of Nebraska-Lincoln and at Japan's RIKEN institute. Using the supercomputer at RIKEN (the fastest in the world when the research started in 2005), the...

May 5, 2009

Can E-Readers Save the Daily Press?

The recession-ravaged newspaper and magazine industries are hoping for their own knight in shining digital armor, in the form of portable reading devices with big screens. Unlike tiny mobile phones and devices like the Kindle that are made to display text from books, these new gadgets, with screens roughly the size of a standard sheet of paper, could present much of the editorial and advertising content of traditional periodicals in generally the same format as they appear in print. And they might be a way to get readers to pay for those periodicals, something they have been reluctant to do on the Web.