THE GIST

- A glass dish contains a "brain" -- a living network of 25,000 rat brain cells connected to an array of 60 electrodes.

- The cells gradually formed a neural network -- a brain.

- The research could lead to tiny, brain-controlled prosthetic devices and unmanned airplanes flown by living computers.

A University of Florida scientist has created a living "brain" of cultured rat cells that now controls an F-22 fighter jet flight simulator.

Scientists say the research could lead to tiny, brain-controlled prosthetic devices and unmanned airplanes flown by living computers.

And if scientists can decipher the ground rules of how such neural networks function, the research also may result in novel computing systems that could tackle dangerous search-and-rescue jobs and perform bomb damage assessment without endangering humans.


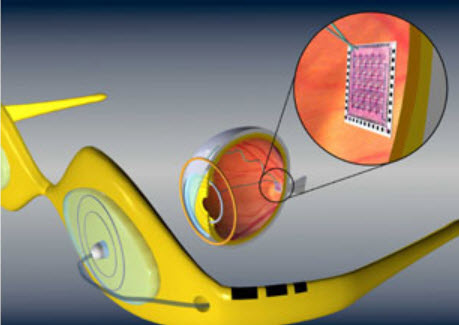

Additionally, the interaction of the cells within the lab-assembled brain also may allow scientists to better understand how the human brain works. The data may one day enable researchers to determine causes and possible non-invasive cures for neural disorders, such as epilepsy.

For the recent project, Thomas DeMarse, a University of Florida professor of biomedical engineering, placed an electrode grid at the bottom of a glass dish and then covered the grid with rat neurons. The cells initially resembled individual grains of sand in liquid, but they soon extended microscopic lines toward each other, gradually forming a neural network -- a brain -- that DeMarse says is a "living computational device."

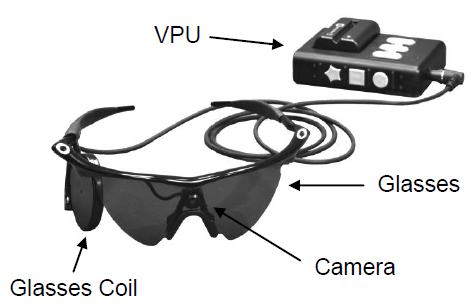

The brain then communicates with the flight simulator through a desktop computer.

"We grow approximately 25,000 cells on a 60-channel multi-electrode array, which permits us to measure the signals produced by the activity each neuron produces as it transmits information across this network of living neurons," DeMarse told Discovery News. "Using these same channels (electrodes) we can also stimulate activity at each of the 60 locations (electrodes) in the network. Together, we have a bidirectional interface to the neural network where we can input information via stimulation. The network processes the information, and we can listen to the network's response."
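To make the idea of a bidirectional interface concrete, here is a minimal Python sketch. It is not DeMarse's actual software. It assumes, purely for illustration, that the plane's deviation from level flight is encoded as a stimulation rate on a couple of input electrodes, and that the difference in firing rate between pairs of output electrodes is read back as pitch and roll commands. The channel numbers, the 20 Hz ceiling, the gain, and the `encode`/`decode` helpers are all hypothetical; only the 60-channel count comes from the article.

```python
from dataclasses import dataclass

# From the article: the culture sits on a 60-channel multi-electrode array,
# and every channel can both record activity and deliver stimulation.
NUM_CHANNELS = 60

# Hypothetical channel assignments, chosen only for illustration.
PITCH_IN, ROLL_IN = 0, 1                 # channels used to stimulate (input)
PITCH_UP_OUT, PITCH_DOWN_OUT = 10, 11    # channels read as pitch commands (output)
ROLL_RIGHT_OUT, ROLL_LEFT_OUT = 20, 21   # channels read as roll commands (output)


@dataclass
class Attitude:
    pitch_deg: float  # positive = nose up
    roll_deg: float   # positive = right wing down


def encode(att: Attitude, max_hz: float = 20.0, max_deg: float = 90.0) -> dict[int, float]:
    """Map deviation from level flight to a stimulation rate (Hz) per input channel.

    Larger deviation -> more frequent pulses; perfectly level flight -> none.
    (Hypothetical scheme: only the size of the deviation is encoded, not its sign.)
    """
    def rate(angle: float) -> float:
        return max_hz * min(abs(angle), max_deg) / max_deg

    return {PITCH_IN: rate(att.pitch_deg), ROLL_IN: rate(att.roll_deg)}


def decode(spike_counts: list[int], window_s: float, gain: float = 0.05) -> tuple[float, float]:
    """Turn firing rates on paired output channels into (pitch, roll) commands.

    Each axis uses a push-pull pair: the difference in firing rate between the
    two channels sets both the direction and the size of the command.
    """
    if len(spike_counts) != NUM_CHANNELS:
        raise ValueError(f"expected {NUM_CHANNELS} channels, got {len(spike_counts)}")
    rates = [count / window_s for count in spike_counts]  # spikes per second
    pitch_cmd = gain * (rates[PITCH_UP_OUT] - rates[PITCH_DOWN_OUT])
    roll_cmd = gain * (rates[ROLL_RIGHT_OUT] - rates[ROLL_LEFT_OUT])
    return pitch_cmd, roll_cmd


if __name__ == "__main__":
    print(encode(Attitude(pitch_deg=45.0, roll_deg=-9.0)))  # {0: 10.0, 1: 2.0}

    counts = [0] * NUM_CHANNELS
    counts[PITCH_UP_OUT], counts[PITCH_DOWN_OUT] = 30, 10  # 0.5 s recording window
    print(decode(counts, window_s=0.5))
```

The push-pull pairs on the output side let one reading say both "how much" and "which way." The input side in this sketch only reports how far off level the plane is, which is one of many simplifications a real system would not make.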

The brain can learn, just as a human brain learns, he said. When the system is first engaged, the neurons don't know how to control the airplane; they don't have any experience.


But, he said, "Over time, these stimulations modify the network's response such that the neurons slowly (over the course of 15 minutes) learn to control the aircraft. The end result is a neural network that can fly the plane to produce relatively stable straight and level flight."

At present, the brain can control the pitch and roll of the F-22 in various virtual weather conditions, ranging from hurricane-force winds to clear blue skies.
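The kind of learning DeMarse describes can be caricatured with a toy, one-axis control loop. In this Python sketch, which is a simplified stand-in and not a model of real neurons or of the University of Florida experiment, the "network" starts with no corrective response (a gain of zero), a steady crosswind keeps rolling the plane, and a hypothetical training rule strengthens the response only while the wings are more than a couple of degrees off level. Treating each step as one simulated second, so that 900 steps echo the article's 15 minutes, is an assumption, as are the drift, tolerance, and learning-rate values and the `simulate_roll` function itself.

```python
def simulate_roll(steps: int = 900, start_roll: float = 30.0, drift: float = 0.5,
                  tolerance: float = 2.0, learning_rate: float = 0.001,
                  max_gain: float = 1.0) -> tuple[list[float], float]:
    """Toy one-axis flight loop with a hypothetical error-driven 'learning' rule.

    Returns the absolute roll angle (degrees) after each step and the final gain.
    All parameter values are invented for illustration.
    """
    roll = start_roll
    gain = 0.0  # untrained "network": no corrective response at first
    history: list[float] = []
    for _ in range(steps):
        command = -gain * roll          # corrective command, proportional to the error
        roll = roll + command + drift   # steady crosswind keeps pushing the wing over
        if abs(roll) > tolerance:
            # Stand-in for training stimulation: applied only while the plane
            # is off level, and it strengthens the corrective response.
            # With the default max_gain of 1, the loop cannot overshoot level.
            gain = min(max_gain, gain + learning_rate * abs(roll))
        history.append(abs(roll))
    return history, gain


if __name__ == "__main__":
    history, gain = simulate_roll()
    print(f"roll after 1 step:    {history[0]:.1f} deg")
    print(f"roll after 900 steps: {history[-1]:.2f} deg (learned gain {gain:.3f})")
```

Because training stops once the plane is within tolerance, the gain settles instead of growing without bound, and the roll angle decays to a small steady offset of roughly the drift divided by the learned gain. Real cultured networks change through synaptic plasticity, which is far messier than a single adjustable number.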

This brain-controlled plane may sound like science fiction, but it is grounded in work that has been taking place for more than a decade. A breakthrough occurred in 1993, when a team of scientists created a Hybrot, which is short for "hybrid robot."

The robot consisted of hardware, computer software, rat neurons, and incubators for those neurons. The computer, programmed to respond to the neuron impulses, controlled a wheel underneath a machine that resembled a child's toy robot.

Last year, U.S. and Australian researchers used a similar neuron-controlled robotic device to produce a "semi-living artist." In this case, the neurons were hooked up to a drawing arm outfitted with different colored markers. The robot managed to draw decipherable pictures -- albeit bad ones that resembled a child's scribbles -- but that technology led to today's fighter-jet simulator success.

Steven Potter, an assistant professor of biomedical engineering at Georgia Tech who directed the living artist project, believes DeMarse's work is important, and that such studies could lead to a variety of engineering and neurobiology research goals.

"A lot of people have been interested in what changes in the brains of animals and people when they are learning things," Potter said. "We're interested in getting down into the network and cellular mechanisms, which is hard to do in living animals. And the engineering goal would be to get ideas from this system about how brains compute and process information."

Though the "brain" can successfully control a flight simulation program, more elaborate applications are a long way off, DeMarse said.

"We're just starting out. But using this model will help us understand the crucial bit of information between inputs and the stuff that comes out," he said. "And you can imagine the more you learn about that, the more you can harness the computation of these neurons into a wide range of applications."